Heidi Alexander, the UK Transport Secretary, recently stated that proposed age verification measures are just the beginning of a broader regulatory effort to protect minors from digital threats [1]. The UK’s Online Safety Act 2023 requires age checks for harmful content from July 2025, but gaps remain in addressing AI-driven risks such as deepfake harassment and predatory chatbots [2].

Regulatory Landscape and Technical Challenges

The UK’s Online Safety Act mandates age verification for pornography but exempts messaging apps and AI chatbots, creating potential loopholes for exploitation [2]. Ofcom will enforce these rules, though critics argue the legislation lags behind emerging threats such as AI-generated child sexual abuse material (CSAM). In the US, states such as Texas and Utah now require parental consent for social media access, and Louisiana has implemented strict age verification for adult sites [3]. These measures face legal challenges over privacy, particularly the collection of data from minors.

Technical implementation remains contentious. The Information Technology and Innovation Foundation (ITIF) recommends interoperable digital IDs and AI-based age estimation tools, while UNICEF advocates “safety by design” principles in tech development [4]. However, ethical concerns persist around biometric data collection and algorithmic bias in age-detection systems.

Emerging Threats and Mitigation Strategies

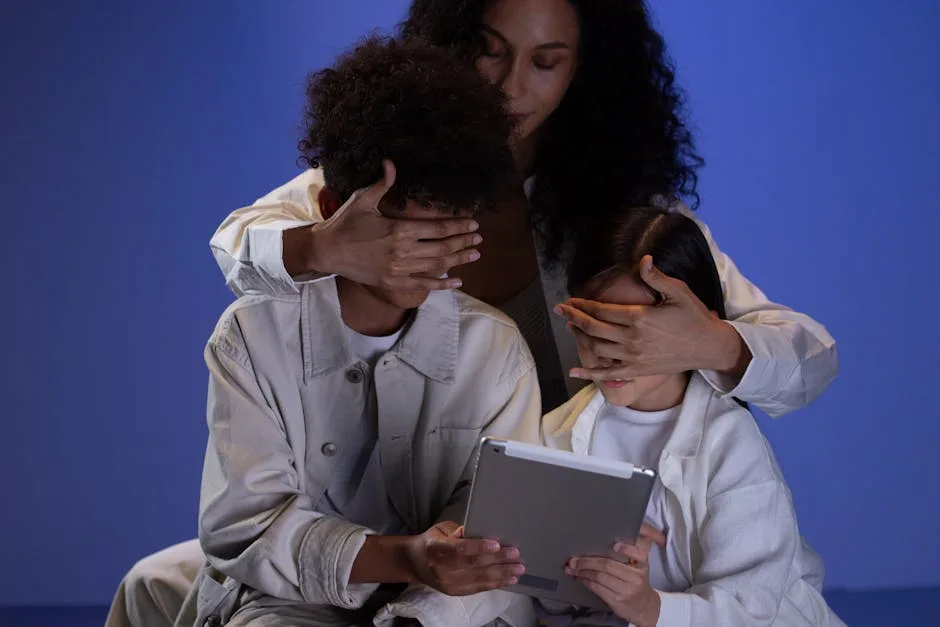

AI-enabled threats dominate current risks: 54.6% of teens experience cyberbullying, and 15% admit to bullying others [5]. Deepfake tools exacerbate this; Rhode Island schools report cases in which AI-manipulated images were used for harassment [6]. Predators increasingly use generative AI to impersonate children or groom victims, with 78% of cases targeting girls [7].

| Threat Type | Prevalence | Mitigation |
|---|---|---|
| AI-Generated CSAM | Rising (NCMEC) | Content fingerprinting |
| Deepfake Bullying | 15% of schools (NEA) | Media literacy programs |
| Chatbot Grooming | 82% male perpetrators | Behavioral analysis APIs |
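
To make the "content fingerprinting" mitigation more concrete, here is a minimal sketch of perceptual hash matching using a simple average hash. This is not PhotoDNA or any vendor's algorithm, which are proprietary. It assumes images have already been downscaled to a small grayscale grid, and the names `average_hash`, `hamming_distance`, `matches_known`, and the `max_distance` threshold are hypothetical choices for illustration.

```python
from typing import List, Set

# Assumption: grayscale pixel values (0-255), already downscaled, e.g. to 8x8.
Grid = List[List[int]]


def average_hash(pixels: Grid) -> int:
    """Pack one bit per pixel: 1 if the pixel is brighter than the grid mean."""
    flat = [value for row in pixels for value in row]
    mean = sum(flat) / len(flat)
    bits = 0
    for value in flat:
        bits = (bits << 1) | (1 if value > mean else 0)
    return bits


def hamming_distance(a: int, b: int) -> int:
    """Count the bit positions where two fingerprints differ."""
    return bin(a ^ b).count("1")


def matches_known(pixels: Grid, known_hashes: Set[int], max_distance: int = 4) -> bool:
    """Flag an image whose fingerprint is within max_distance bits of a known one."""
    candidate = average_hash(pixels)
    return any(hamming_distance(candidate, known) <= max_distance for known in known_hashes)


# Usage: a slightly brightened copy still matches; an inverted image does not.
original = [[200] * 4 + [50] * 4 for _ in range(8)]
brightened = [[min(255, v + 5) for v in row] for row in original]
inverted = [[255 - v for v in row] for row in original]
known = {average_hash(original)}
print(matches_known(brightened, known), matches_known(inverted, known))
```

Comparing by Hamming distance rather than exact equality is what lets a fingerprint survive small edits such as re-encoding or brightness changes. A production system would rely on a vetted hash database and a more robust algorithm.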

Actionable Recommendations

For organizations handling minors’ data:

- Implement COPPA-compliant age gates with cryptographic proof-of-age tokens

- Deploy automated content moderation for CSAM detection (e.g., hash matching with Microsoft PhotoDNA)

- Adopt device-level “child flags” as proposed by ITIF to standardize parental controls
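
As an illustration of the proof-of-age token idea in the first recommendation, the sketch below signs a minimal claim (an over-18 flag and an expiry, with no identity data) using an HMAC. It is one possible design under stated assumptions, not a standard or a COPPA compliance recipe. The shared `SECRET_KEY`, the claim fields `over_18` and `exp`, and the functions `issue_token` and `verify_token` are all hypothetical. A real deployment would more likely use asymmetric signatures so that sites can verify tokens without being able to issue them.

```python
import base64
import hashlib
import hmac
import json
import time
from typing import Optional

# Hypothetical secret shared by the age-verification provider and the site.
SECRET_KEY = b"demo-secret-do-not-use-in-production"


def _b64(data: bytes) -> str:
    return base64.urlsafe_b64encode(data).decode("ascii")


def issue_token(over_18: bool, ttl_seconds: int = 300, now: Optional[float] = None) -> str:
    """Issue a signed claim that carries only an age attribute and an expiry."""
    issued = time.time() if now is None else now
    payload = json.dumps(
        {"over_18": over_18, "exp": issued + ttl_seconds}, sort_keys=True
    ).encode("utf-8")
    signature = hmac.new(SECRET_KEY, payload, hashlib.sha256).digest()
    return f"{_b64(payload)}.{_b64(signature)}"


def verify_token(token: str, now: Optional[float] = None) -> bool:
    """Accept only tokens with a valid signature, a future expiry, and over_18 set."""
    try:
        payload_b64, signature_b64 = token.split(".")
        payload = base64.urlsafe_b64decode(payload_b64)
        signature = base64.urlsafe_b64decode(signature_b64)
    except ValueError:  # wrong number of parts or invalid base64
        return False
    expected = hmac.new(SECRET_KEY, payload, hashlib.sha256).digest()
    if not hmac.compare_digest(signature, expected):
        return False
    claims = json.loads(payload)
    current = time.time() if now is None else now
    return bool(claims.get("over_18")) and claims.get("exp", 0) > current


# Usage: the site checks the token without learning who the user is.
token = issue_token(over_18=True)
print(verify_token(token))
```

The short expiry limits replay, and keeping the payload to a single attribute reflects the privacy concern raised earlier about collecting data from minors.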

Under the Children’s Internet Protection Act (CIPA), which the FCC administers, schools must filter harmful content to remain eligible for E-rate funding, though enforcement varies [8]. Technical teams should prioritize:

“Safety by design over retroactive fixes – build protections into architecture from day one.”

– UNICEF WeProtect Framework [4]

Conclusion

While new regulations mark progress, their technical execution requires careful balancing of privacy, security, and usability. Ongoing AI advancements will necessitate adaptive policies and cross-border cooperation to protect minors effectively.

References

[1] “More rules being considered to keep children safe online,” BBC News, 2025. [Online]. Available: https://www.bbc.com/news/articles/cp82447l84ko

[2] “Online Safety Act 2023,” UK Parliament, 2023. [Online]. Available: https://www.legislation.gov.uk/ukpga/2023/50/contents/enacted

[3] “State Age Assurance Laws,” AVP Association, 2025. [Online]. Available: https://avpassociation.com/us-state-age-assurance-laws-for-social-media/

[4] “Keeping Children Safe Online,” UNICEF, 2025. [Online]. Available: https://www.unicef.org/protection/keeping-children-safe-online

[5] “Internet Safety for Kids,” SafeWise, 2025. [Online]. Available: https://www.safewise.com/resources/internet-safety-kids

[6] “AI Deepfakes in Schools,” NEA Rhode Island, 2025. [Online]. Available: https://www.neari.org/advocating-change/new-from-neari/ai-deepfakes-disturbing-trend-school-cyberbullying

[7] “Online Exploitation Trends,” NCMEC, 2025. [Online]. Available: https://www.missingkids.org

[8] “Children’s Internet Protection Act,” FCC, 2025. [Online]. Available: https://www.fcc.gov/consumers/guides/childrens-internet-protection-act